In my previous blog post, I introduced the process and tools we’re using to publish a monthly curated news update about water, sanitation and hygiene (WASH), as part of our work supporting knowledge management (KM) in the Building Demand for Sanitation (BDS) programme.

With 9 monthly issues published so far, how do we measure whether this activity is working well, and whether grantees are using the curated news put together by their peers?

Because we use several tools to run our Curated Updates, we also draw on several metrics and indicators that together form our basic M&E system. Before we dive into this, and into what we’ve learned from our own monitoring and evaluation, it’s worth mentioning that this activity is intended for a rather small audience: an initial list of around 50 recipients, and a website that is deliberately not indexed by search engines.

Nevertheless, even a small project like this one can yield some useful lessons, which could perhaps be scaled up to larger initiatives.

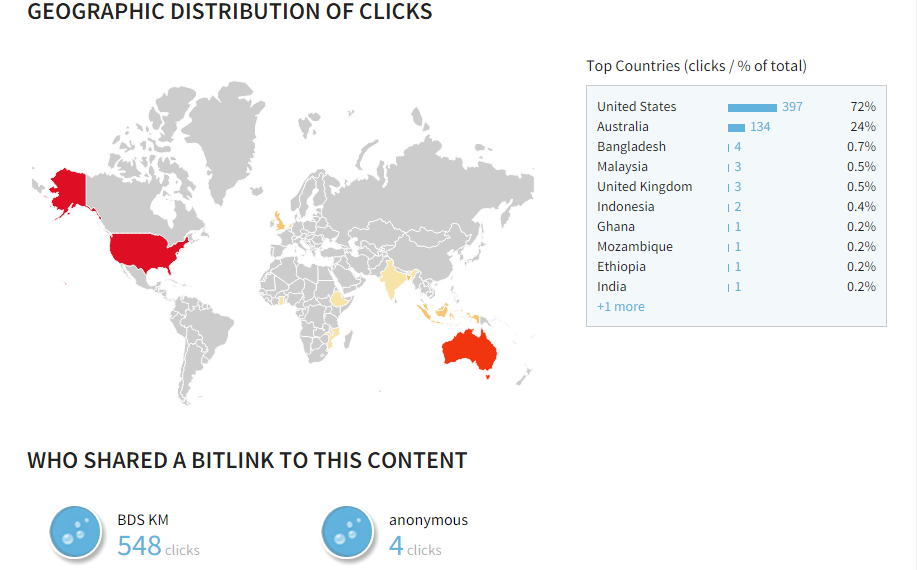

How useful is the service?

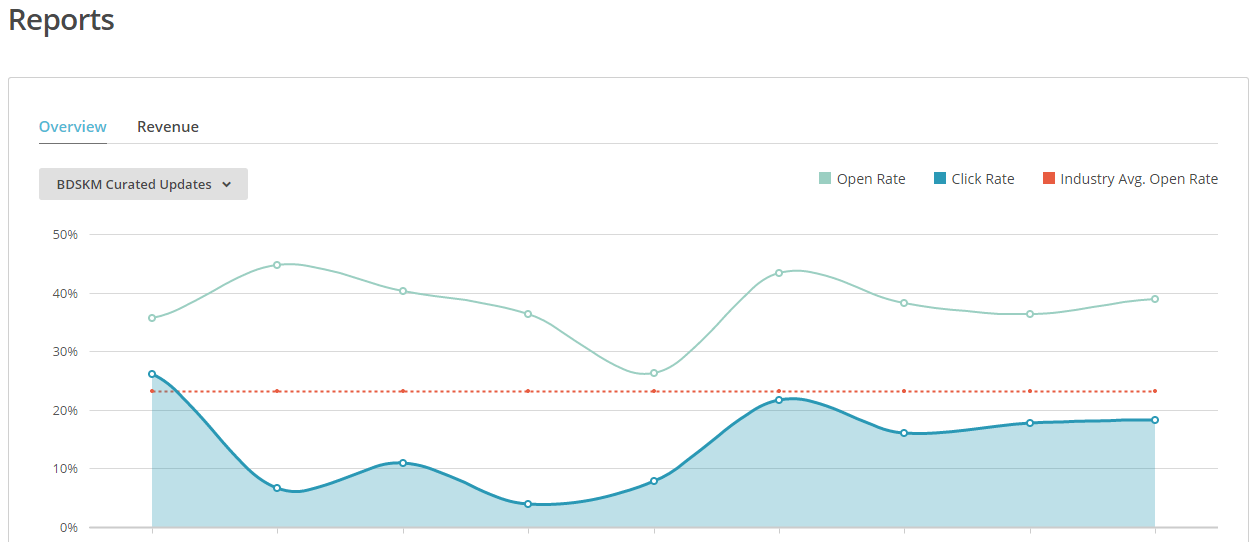

From what we can see, users do make use of this curated news update: they open the newsletter that lands in their mailboxes during the first week of each month. Of the many things you can monitor in MailChimp, we decided to focus primarily on open rate and click rate. As you can see from the image above, MailChimp also provides benchmarks and averages for your industry, and we’ve been pleased that our open rate has consistently stayed above what MailChimp considers the industry average.

Besides the generally positive informal and anecdotal feedback we receive from users, we can also see that they appreciate the service: the list of subscribers keeps growing, even though we don’t actively promote it. This growth is driven entirely by peer recommendations, with subscribers encouraging colleagues to register because they find it useful to receive a monthly newsletter with a few key resources, already filtered and digested by experts whose judgement they can trust.
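For readers who want to reproduce these two figures outside the MailChimp dashboard, here is a minimal Python sketch. It assumes MailChimp’s definitions as we understand them: open rate is unique opens divided by successfully delivered emails, and click rate is unique clicks divided by successfully delivered emails. The `IssueStats` class, the counts and the `BENCHMARK` value are hypothetical placeholders, not our actual data or MailChimp’s published industry average.

```python
from dataclasses import dataclass


@dataclass
class IssueStats:
    """Counts for one newsletter issue (hypothetical numbers, not our real data)."""
    name: str
    delivered: int      # successfully delivered emails
    unique_opens: int   # recipients who opened at least once
    unique_clicks: int  # recipients who clicked at least one link

    @property
    def open_rate(self) -> float:
        # Share of delivered emails that registered an open
        return self.unique_opens / self.delivered if self.delivered else 0.0

    @property
    def click_rate(self) -> float:
        # Share of delivered emails that registered at least one click
        return self.unique_clicks / self.delivered if self.delivered else 0.0


def issues_above_benchmark(issues, benchmark_open_rate):
    """Return the names of issues whose open rate beats the given benchmark."""
    return [i.name for i in issues if i.open_rate > benchmark_open_rate]


if __name__ == "__main__":
    # Illustrative values only; BENCHMARK is a placeholder, not MailChimp's figure.
    BENCHMARK = 0.20
    issues = [
        IssueStats("Issue 1", delivered=50, unique_opens=20, unique_clicks=8),
        IssueStats("Issue 2", delivered=55, unique_opens=9, unique_clicks=3),
    ]
    for issue in issues:
        print(f"{issue.name}: open {issue.open_rate:.0%}, click {issue.click_rate:.0%}")
    print(issues_above_benchmark(issues, BENCHMARK))
```

Tracking both rates per issue, rather than only the latest campaign, makes it easier to see whether changes made in one issue show up in the next.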

Are users clicking on the suggested links?

In general, resources shared through the newsletter are opened, and users go on to read the original documents. MailChimp automatically tracks the links included in any newsletter. However, for technical reasons this didn’t work at first with our RSS-driven campaign, and we failed to track clicks properly in the first few issues of the Curated Updates service. So while we were figuring out the issue (which we eventually did, thanks to the great MailChimp support team), we started using Bitly to shorten the link for each curated resource included in the newsletter.

This gives us a better idea of which resources resonate with our audience, where the click happens (in the newsletter, on the website or elsewhere) and where the audience is located. Bitly also shows us whether a link is re-shared on other social media channels, giving us better link tracking and potential insights into where our audience interacts online.
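As an illustration of the Bitly step, the sketch below prepares a request to Bitly’s v4 `shorten` endpoint using only the Python standard library. It is a sketch under assumptions: it is not necessarily how we shortened our own links (the Bitly web dashboard works too), the access token is a placeholder you would generate in your own Bitly account, and the helper names `build_shorten_request` and `shorten` are hypothetical.

```python
import json
import urllib.request

# Bitly API v4 endpoint for shortening a single URL.
BITLY_SHORTEN_URL = "https://api-ssl.bitly.com/v4/shorten"


def build_shorten_request(long_url: str, token: str) -> urllib.request.Request:
    """Prepare (but do not send) a POST request asking Bitly to shorten long_url."""
    body = json.dumps({"long_url": long_url}).encode("utf-8")
    return urllib.request.Request(
        BITLY_SHORTEN_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {token}",  # placeholder access token
            "Content-Type": "application/json",
        },
        method="POST",
    )


def shorten(long_url: str, token: str) -> str:
    """Send the request and return the short link from Bitly's JSON response."""
    with urllib.request.urlopen(build_shorten_request(long_url, token)) as resp:
        return json.load(resp)["link"]
```

Calling `shorten("https://example.org/report.pdf", "YOUR_ACCESS_TOKEN")` would return the short link; keeping `build_shorten_request` separate makes the request easy to inspect without sending anything.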

How valuable are the resources?

The RatingWidget WordPress plugin gives us a better sense of how useful and relevant each individual curated news item is to users, and of their appetite for different types of content. As we’re using the pro version of the plugin, the ratings are neatly collected and displayed in the RatingWidget dashboard, as illustrated in the image below.

As you can see, the number of votes remains low, and we find it challenging to encourage users to make more use of this feature. However, we believe it is still a valuable addition to our M&E for this activity. Ideally, we would embed the rating widget directly in the newsletter campaign, but this doesn’t seem technically possible, or at least we haven’t yet figured out how to do it!
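Because vote counts are low, it can help to look at the number of votes alongside the average score before drawing conclusions about an item. The Python sketch below assumes the ratings have been exported as hypothetical `(item_title, stars)` pairs; it does not use any RatingWidget API, and the `min_votes` threshold of 3 is an arbitrary illustrative choice.

```python
from collections import defaultdict
from statistics import mean


def summarise_ratings(votes, min_votes=3):
    """Summarise star ratings per curated item.

    votes: iterable of (item_title, stars) pairs, e.g. from a hypothetical export.
    Returns {item_title: (vote_count, average_stars, has_enough_votes)}.
    """
    by_item = defaultdict(list)
    for item, stars in votes:
        by_item[item].append(stars)
    return {
        # Flag items whose average rests on too few votes to be meaningful
        item: (len(stars), round(mean(stars), 2), len(stars) >= min_votes)
        for item, stars in by_item.items()
    }
```

For example, `summarise_ratings([("A", 5), ("A", 4), ("A", 3), ("B", 2)])` reports three votes averaging 4 for item A, and flags item B as having too few votes to read much into its score.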

Publish, review, adapt, publish...

This activity was planned around a 12-month “publish, review, adapt, publish” cycle, and with each issue published so far we have tweaked the newsletter, the way we present its content both there and online, and some of the tools we use to track users’ behaviours and preferences. Each time, we try to learn something and apply it in the next issue: the curators from a content point of view, looking for resources that resonate more with the audience, and us from a technical point of view.

The service runs for a year, ending in July 2015. As we review how it worked, we’ll share what we learn and update our findings on this blog.